Lessons Learned from Kafka Integrations Using the Advantco Kafka Adapter for SAP Integration Suite

1. Consumer Group and Consumers

Understand how consumer groups distribute work. Within a group, each partition of a topic is consumed by exactly one consumer at a time, so the members of the group share the load and process partitions in parallel. Size the number of consumers in a group against the number of partitions. Too few consumers overload the individual consumers, while consumers beyond the partition count sit idle. A well-sized group uses resources efficiently and processes messages faster.
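
The effect of the consumer count can be illustrated with a minimal Python sketch. It is a toy model, not the adapter's or Kafka's code: the function `assign_partitions` is hypothetical and distributes the partitions of one topic round-robin over the members of one group. Kafka's real assignors (range, round-robin, sticky, cooperative-sticky) differ in detail, but all of them give each partition to exactly one member.

```python
from typing import Dict, List


def assign_partitions(num_partitions: int, consumers: List[str]) -> Dict[str, List[int]]:
    """Round-robin the partitions of one topic over the members of one consumer group.

    Simplified model: each partition is owned by exactly one member of the group.
    """
    members = sorted(consumers)  # deterministic member order
    assignment: Dict[str, List[int]] = {m: [] for m in members}
    for partition in range(num_partitions):
        owner = members[partition % len(members)]
        assignment[owner].append(partition)
    return assignment


# 6 partitions, 4 consumers: the load is spread, two members own 2 partitions each.
print(assign_partitions(6, ["c1", "c2", "c3", "c4"]))
# 3 partitions, 5 consumers: c4 and c5 receive nothing and stay idle.
print(assign_partitions(3, ["c1", "c2", "c3", "c4", "c5"]))
```

Adding a sixth or seventh consumer to a three-partition topic therefore adds no throughput; increasing the partition count is what raises the ceiling for parallelism.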

2. Rebalancing Issues

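Frequent rebalancing disrupts message consumption, because every time a consumer leaves or rejoins the group, the partitions are reassigned among the members. To avoid this during polling, let consumers keep their connections alive between polls. The Connection Keep Alive value MUST be set greater than the polling interval; otherwise the connection can drop before the next poll and the consumer has to rejoin the group. Keeping the consumer group stable in this way avoids unnecessary rebalancing and improves overall system performance.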

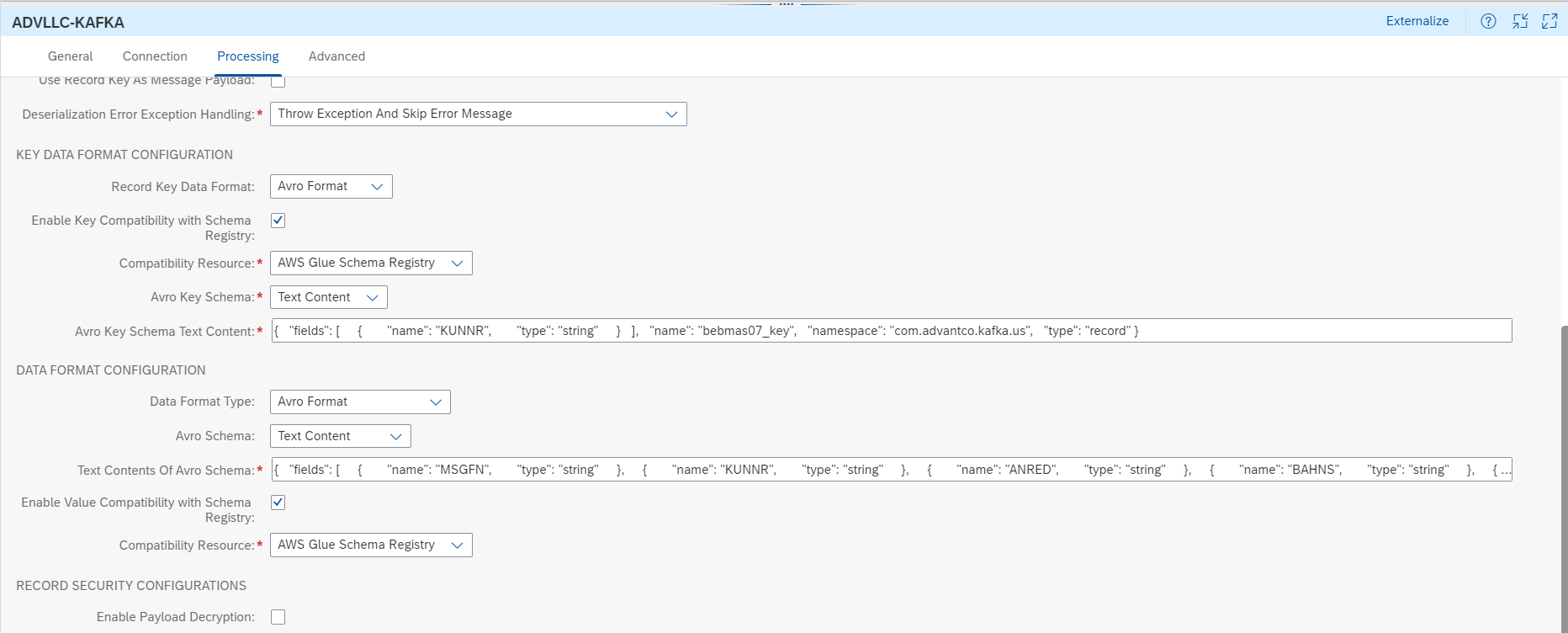

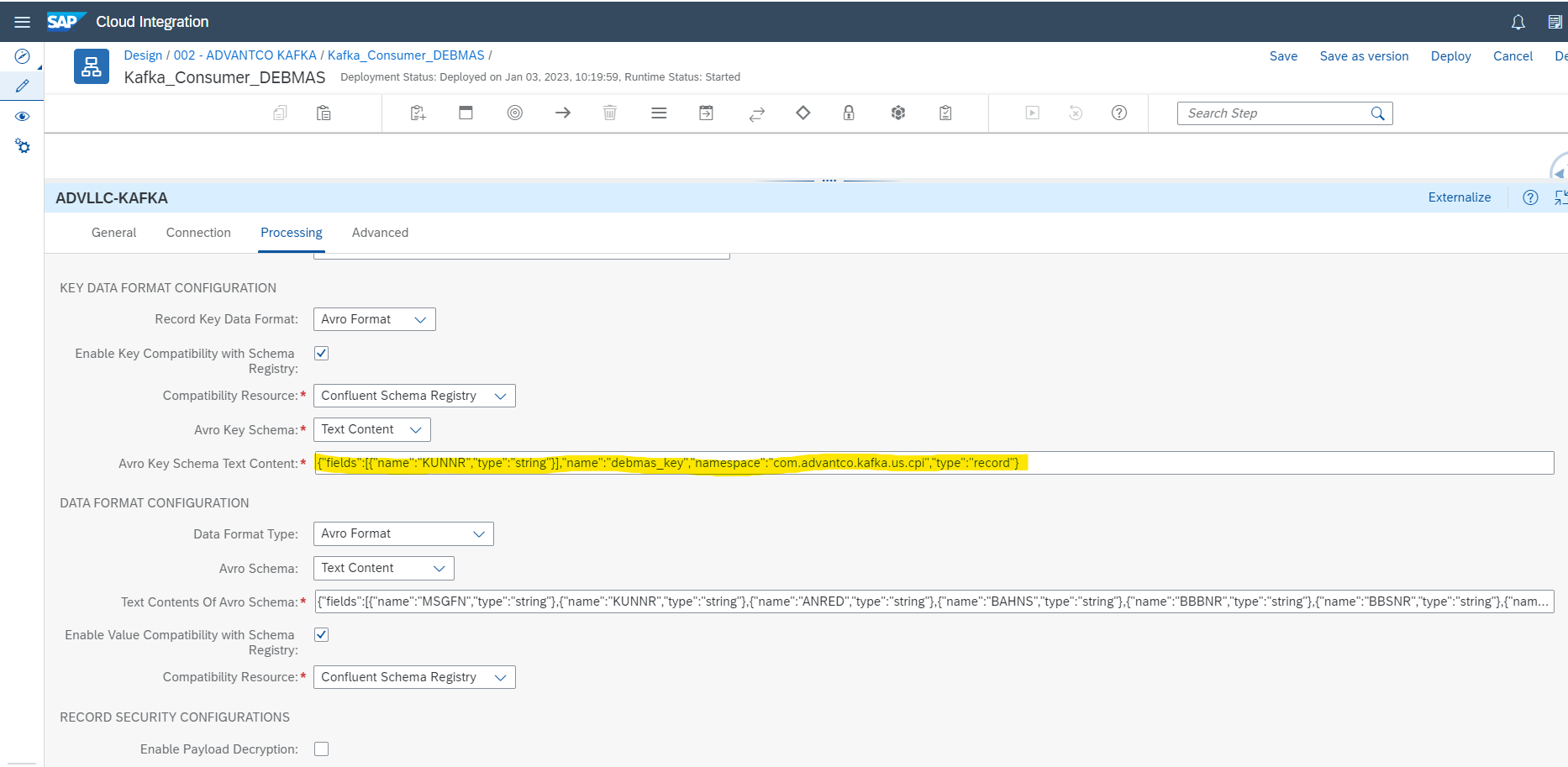
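
Why the keep-alive must exceed the polling interval can be sketched with a small Python model. This is an assumption about the mechanism, not documented adapter behavior: the hypothetical function `count_rejoins` treats the connection as closed once it has been idle longer than `keep_alive_ms`, and counts each reconnect as a rejoin that triggers a rebalance. The parameter names are illustrative stand-ins for the adapter's Connection Keep Alive and polling interval settings; at the Kafka client level, the related consumer settings are `session.timeout.ms`, `heartbeat.interval.ms`, and `max.poll.interval.ms`.

```python
from typing import List


def count_rejoins(poll_times_ms: List[int], keep_alive_ms: int) -> int:
    """Count how often a consumer would have to rejoin its group.

    Hypothetical model: the connection is closed once it has been idle for
    longer than keep_alive_ms, and every reconnect means the consumer rejoins
    the group, which triggers a rebalance for all members.
    """
    rejoins = 0
    for previous, current in zip(poll_times_ms, poll_times_ms[1:]):
        if current - previous > keep_alive_ms:
            rejoins += 1
    return rejoins


# One poll per minute for ten minutes (11 polls, 10 idle gaps of 60 s).
polls = list(range(0, 600_001, 60_000))
print(count_rejoins(polls, keep_alive_ms=30_000))  # 10: keep-alive shorter than polling interval
print(count_rejoins(polls, keep_alive_ms=90_000))  # 0: keep-alive longer than polling interval
```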

3. Schema Registries

Use a schema registry to manage the data schemas of your Kafka topics. Defining and managing schemas centrally makes data validation more robust and keeps the integration between Kafka and SAP applications consistent. A schema registry also enforces compatibility rules, so schemas can evolve over time without breaking existing producers or consumers. It is critical that the Kafka adapter supports different schema registries, such as the AWS Glue Schema Registry or the Azure Schema Registry.
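
The kind of compatibility rule a registry enforces can be sketched in Python. This is a simplified, Avro-style "backward" check under stated assumptions: schemas are plain dicts with a `fields` list (not a registry's actual API or wire format), the function `is_backward_compatible` is hypothetical, and every type change is rejected, although real Avro permits some promotions such as `int` to `long`.

```python
from typing import Any, Dict, List

# A schema here is a plain dict with a "fields" list, loosely modeled on an Avro record.
Schema = Dict[str, List[Dict[str, Any]]]


def is_backward_compatible(old: Schema, new: Schema) -> bool:
    """Simplified BACKWARD check: can a reader using `new` decode data written with `old`?"""
    old_fields = {f["name"]: f for f in old["fields"]}
    for field in new["fields"]:
        previous = old_fields.get(field["name"])
        if previous is None:
            # Added field: old records lack it, so the reader needs a default.
            if "default" not in field:
                return False
        elif previous["type"] != field["type"]:
            # Simplification: reject every type change (Avro allows some promotions).
            return False
    # Fields dropped from the new schema are simply ignored when reading old data.
    return True


customer_v1: Schema = {"fields": [{"name": "id", "type": "string"},
                                  {"name": "name", "type": "string"}]}
customer_v2: Schema = {"fields": customer_v1["fields"] + [
    {"name": "country", "type": "string", "default": ""}]}
customer_v3: Schema = {"fields": customer_v1["fields"] + [
    {"name": "email", "type": "string"}]}

print(is_backward_compatible(customer_v1, customer_v2))  # True: new field has a default
print(is_backward_compatible(customer_v1, customer_v3))  # False: new field has no default
```

A registry configured for backward compatibility would refuse to register `customer_v3`, which is exactly the protection against breaking SAP-side consumers that the registry provides.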

4. Tombstone Record

Use tombstone records to delete data, or mark it for deletion, within Kafka topics. A tombstone is a record with a key and a null value; it signals that the message with that key should be treated as deleted, and on compacted topics, log compaction eventually removes the earlier records for that key. Handling tombstone records properly keeps the data in SAP applications in sync with Kafka.
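
The state that a compacted topic converges to can be modeled in a few lines of Python. The function `compacted_state` and the customer keys are hypothetical. Real compaction runs asynchronously in the broker, and a tombstone itself remains readable for `delete.retention.ms`, so consumers can still see older values and should map a null value to a delete on the SAP side.

```python
from typing import Dict, List, Optional, Tuple

# (key, value) pairs in log order; a value of None is a tombstone.
Record = Tuple[str, Optional[str]]


def compacted_state(log: List[Record]) -> Dict[str, str]:
    """Latest value per key once compaction has run; tombstoned keys disappear."""
    state: Dict[str, str] = {}
    for key, value in log:
        if value is None:
            state.pop(key, None)  # tombstone: treat the key as deleted
        else:
            state[key] = value   # newer value replaces the older one
    return state


log: List[Record] = [
    ("C100", '{"name": "ACME"}'),
    ("C200", '{"name": "Globex"}'),
    ("C100", '{"name": "ACME Corp"}'),
    ("C200", None),  # tombstone for customer C200
]
print(compacted_state(log))  # only C100 remains, with its latest value
```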

5. Resetting Topic Offset

A consumer group's offset on a topic can be reset to a specific position, so that consumers reprocess messages from a chosen point in the topic. This is valuable for reprocessing data or recovering from failures without starting over from the beginning of the topic.
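
What a reset does to the group's read position can be shown with a toy Python model. The class `PartitionCursor` is hypothetical and treats offset 0 as the earliest offset, which is a simplification because retention can delete older records. On a real cluster, the standard `kafka-consumer-groups.sh` tool performs resets with `--reset-offsets` and options such as `--to-offset`, `--to-earliest`, or `--to-datetime`, and the group must have no active members while the reset is executed.

```python
from typing import List


class PartitionCursor:
    """Toy model of one partition plus one consumer group's committed offset."""

    def __init__(self, records: List[str]) -> None:
        self.records = records
        self.committed = 0  # offset of the next record the group will read

    def poll(self, max_records: int) -> List[str]:
        batch = self.records[self.committed:self.committed + max_records]
        self.committed += len(batch)  # commit once the batch has been handled
        return batch

    def reset_to(self, offset: int) -> None:
        # Clamp to the valid range [earliest, log end].
        self.committed = max(0, min(offset, len(self.records)))


cursor = PartitionCursor([f"msg-{i}" for i in range(10)])
cursor.poll(10)          # consume everything once
cursor.reset_to(7)       # rewind the group to offset 7
print(cursor.poll(10))   # ['msg-7', 'msg-8', 'msg-9']
```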

6. Enabling Auto Commit

Consider enabling auto commit for consumers, as automating the offset commit process can improve performance and throughput. However, carefully weigh this against the risk of data loss during failures: an offset can be committed before the corresponding message has been fully processed, or, in the opposite case, processed messages can be delivered again after a restart.
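
The trade-off can be made concrete with a small Python model of a crash in the middle of a batch. The function `after_crash` and its parameters are hypothetical and model two extremes: offsets committed as soon as a batch is fetched, and offsets committed only after the whole batch is processed. The Kafka Java client's `enable.auto.commit` commits the consumer position periodically (every `auto.commit.interval.ms`) from within `poll()`, so where a given setup falls between these extremes depends on whether records are fully processed before the next commit.

```python
from typing import List, Tuple


def after_crash(batch_size: int, crash_at: int, total: int,
                commit_on_fetch: bool) -> Tuple[List[int], List[int]]:
    """Return (lost, duplicated) offsets when processing crashes at offset `crash_at`.

    commit_on_fetch=True:  a batch's offsets are committed when it is fetched,
                           before processing finishes (commit runs ahead).
    commit_on_fetch=False: offsets are committed only after the whole batch is processed.
    Offsets below `crash_at` were fully processed before the crash.
    """
    batch_start = (crash_at // batch_size) * batch_size
    batch_end = min(batch_start + batch_size, total)
    resume_from = batch_end if commit_on_fetch else batch_start
    lost = list(range(crash_at, resume_from))        # never processed, never re-delivered
    duplicated = list(range(resume_from, crash_at))  # processed, then delivered again
    return lost, duplicated


print(after_crash(batch_size=5, crash_at=7, total=10, commit_on_fetch=True))   # ([7, 8, 9], [])
print(after_crash(batch_size=5, crash_at=7, total=10, commit_on_fetch=False))  # ([], [5, 6])
```

Committing ahead of processing loses records; committing after processing re-delivers them, which downstream SAP processing must then tolerate, for example through idempotent handling.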

7. Handling Strange Characters

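Be prepared for record keys and record headers that contain unexpected characters when consuming from third-party producers. Kafka transports keys and header values as raw bytes, so nothing guarantees that a producer used UTF-8 or avoided control characters. Make sure your consumer applications handle such data gracefully to avoid character-encoding problems and preserve data integrity.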
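
One defensive way to decode such values is sketched below in Python. The function `decode_key_or_header` is hypothetical and reflects one possible policy: decode as UTF-8, drop a leading byte-order mark, replace undecodable bytes with U+FFFD instead of guessing the producer's encoding, and strip characters that XML 1.0 does not allow, since the payload usually ends up as XML in SAP.

```python
import re
from typing import Optional

# Characters XML 1.0 does not allow (most C0 control characters, surrogates, U+FFFE/U+FFFF).
_XML_INVALID = re.compile(r"[^\x09\x0A\x0D\x20-\uD7FF\uE000-\uFFFD\U00010000-\U0010FFFF]")


def decode_key_or_header(raw: Optional[bytes]) -> Optional[str]:
    """Decode a Kafka record key or header value (raw bytes) without raising."""
    if raw is None:
        return None  # null keys and header values are legal in Kafka
    # utf-8-sig also drops a leading byte-order mark; undecodable bytes become U+FFFD.
    text = raw.decode("utf-8-sig", errors="replace")
    # Strip characters that would make an XML message invalid downstream.
    return _XML_INVALID.sub("", text)


print(decode_key_or_header(b"\xef\xbb\xbfORDER-42"))  # ORDER-42 (BOM removed)
print(ascii(decode_key_or_header(b"caf\xe9")))         # 'caf\ufffd' (Latin-1 byte replaced)
print(decode_key_or_header(b"id\x00123"))             # id123 (NUL removed)
```

Whether to strip, replace, or reject such values is a design decision: stripping changes the key, which matters if the key is later used for ordering or deduplication.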

8. Ordering

Use record keys to preserve the order of messages. Kafka guarantees ordering only within a partition, and records with the same key are written to the same partition as long as the partition count does not change. Messages with the same key are therefore processed in sequence by the same consumer, which keeps the data consistent and reliable. For example, to keep customer master events in order, use the SAP customer number as the record key.

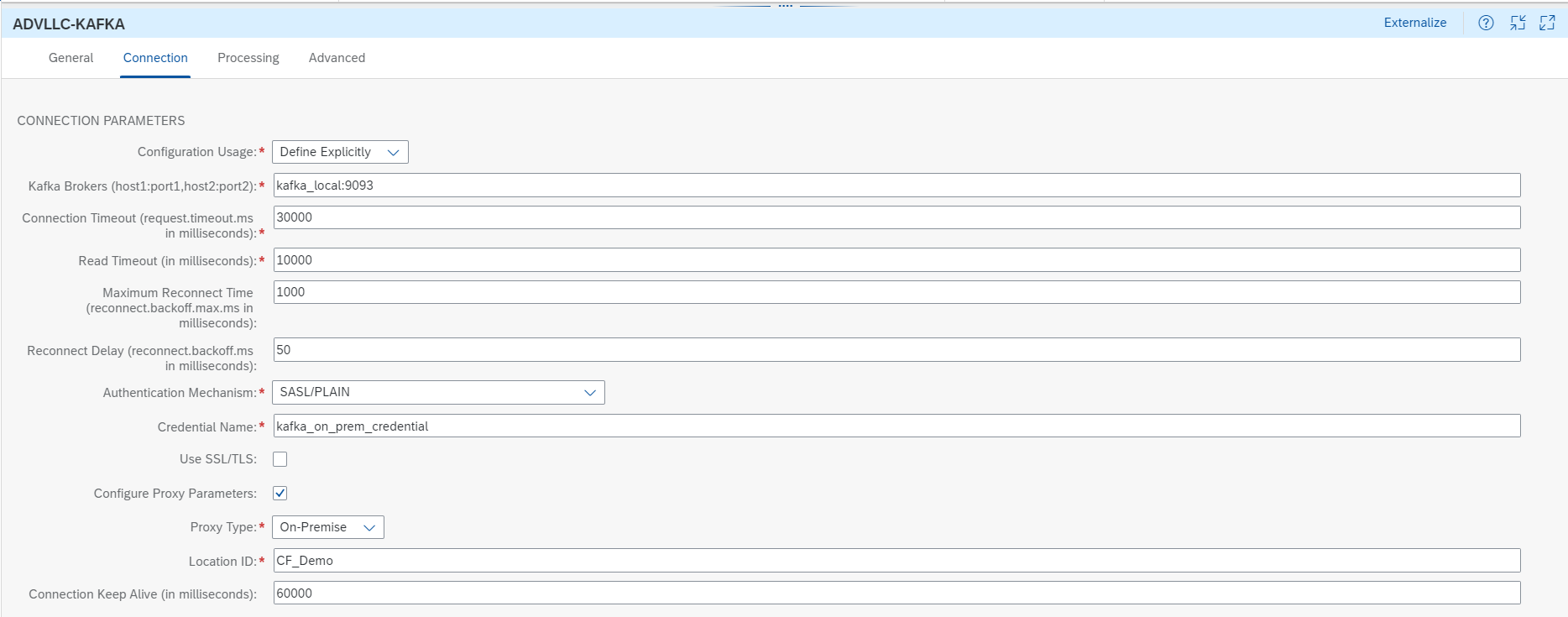
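
Key-based routing can be sketched in Python to show why the same key keeps its order. The functions `partition_for` and `route` and the customer numbers are hypothetical. Kafka's default partitioner hashes the serialized key with murmur2; this sketch substitutes CRC32 only to stay within the standard library, so the concrete partition numbers differ from Kafka's, but the principle is the same: a stable hash of the key picks the partition.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Event = Tuple[str, str]  # (record key, payload)


def partition_for(key: str, num_partitions: int) -> int:
    """Pick a partition from a stable hash of the key (CRC32 here, murmur2 in Kafka)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events: List[Event], num_partitions: int) -> Dict[int, List[Event]]:
    """Append each event to its key's partition, preserving arrival order per partition."""
    partitions: Dict[int, List[Event]] = defaultdict(list)
    for key, payload in events:
        partitions[partition_for(key, num_partitions)].append((key, payload))
    return dict(partitions)


events = [("1000123", "create"), ("1000456", "create"),
          ("1000123", "update"), ("1000456", "delete"), ("1000123", "block")]
for partition, records in sorted(route(events, num_partitions=3).items()):
    print(partition, records)
```

All events for customer 1000123 land in one partition in their original order. Changing the partition count changes the mapping, so keys can move to other partitions after a topic is repartitioned.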

9. SAP Cloud Connector

Use the SAP Cloud Connector to integrate on-premises Kafka clusters securely with cloud-based services in the SAP Integration Suite. The Cloud Connector establishes a secure tunnel from the on-premises network to the cloud, protecting data privacy and enabling a smooth data flow across hybrid environments.

10. Compatible with Different Brokers

Compatibility with different Kafka brokers is essential for seamless data integration, vendor flexibility, and building robust, future-proof data pipelines within a distributed system. It enables organizations to take advantage of the strengths of various brokers while avoiding vendor lock-in and improving overall data ecosystem efficiency.

11. Releases

Keep the Kafka client library up to date: new releases bring bug fixes, new features, and performance improvements.

12. Note the Difference

Keep in mind that Azure Event Hubs consumer groups and Kafka consumer groups are different, and understanding their distinctions is essential for successful integration.

13. Performance Tuning

Continuously optimize Kafka's performance by adjusting configurations, conducting hardware upgrades, and scaling Kafka clusters as needed to handle increased loads.

14. Security Considerations

Implement strong security measures, such as encryption, authentication, and authorization, to protect data both in transit and at rest within the Kafka ecosystem.

15. Data Transformation and Enrichment

Consider data transformation and enrichment during ingestion to improve data quality and streamline downstream processing.

16. Version Compatibility

Ensure compatibility between all components within the Kafka ecosystem to avoid potential issues related to different Kafka versions.

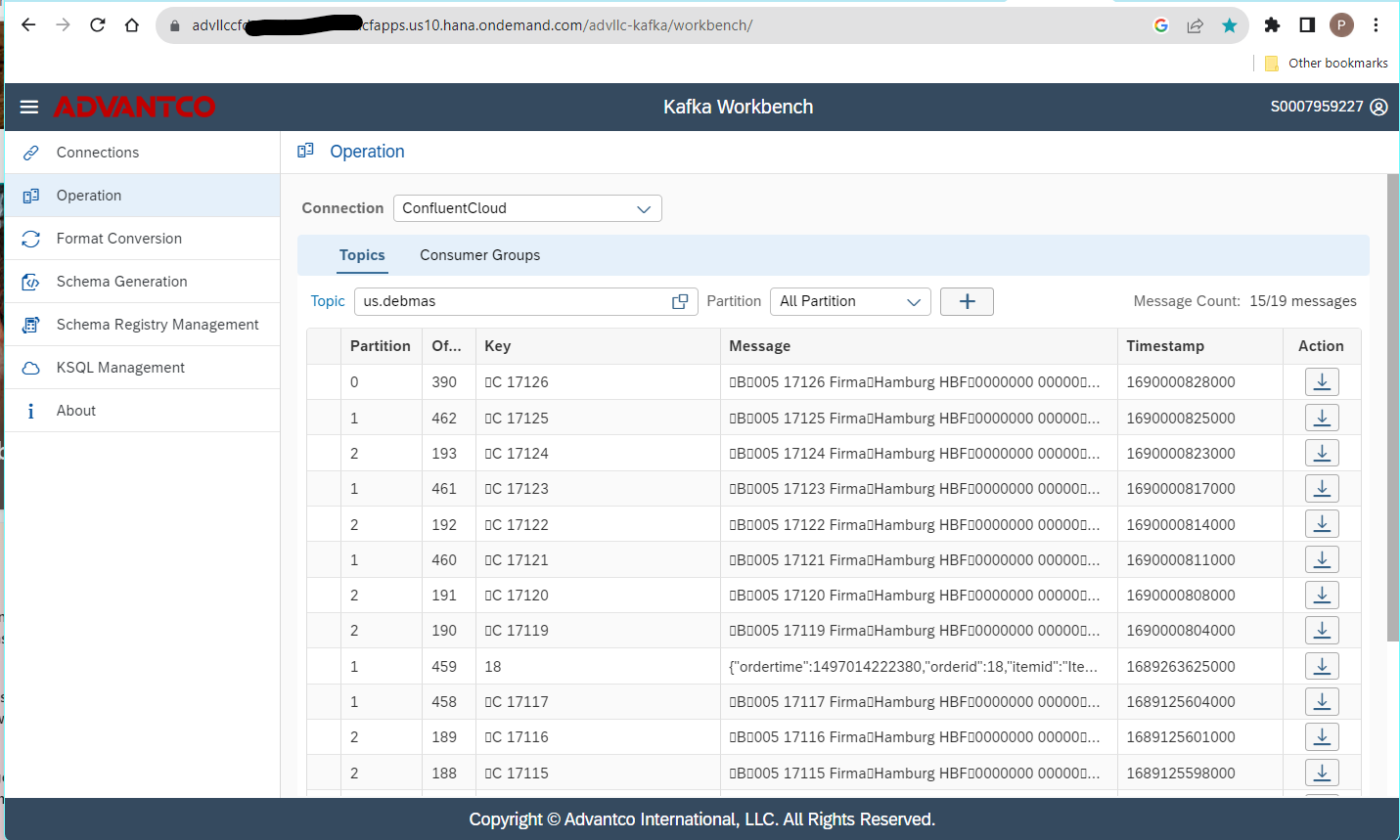

17. Preparation & Troubleshooting

Use the Advantco Kafka Workbench during preparation, for example to set up connections to the broker and to define JSON <-> XML conversion rules. The Workbench is also very useful for troubleshooting, because it gives direct access to the topics.
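
To illustrate what a JSON-to-XML conversion rule has to decide, here is a minimal Python sketch using only `json` and `xml.etree.ElementTree`. The mapping rules (objects become nested elements, arrays become repeated sibling elements, booleans become `true`/`false`, null becomes an empty element) and the root name `Record` are assumptions for illustration, not the Workbench's rule syntax or the adapter's converter. JSON keys that are not valid XML names would need an extra renaming rule.

```python
import json
import xml.etree.ElementTree as ET
from typing import Any


def _append(parent: ET.Element, name: str, value: Any) -> None:
    """Append `value` under `parent` as element(s) named `name`."""
    if isinstance(value, list):
        # Arrays become repeated sibling elements with the same name.
        for item in value:
            _append(parent, name, item)
        return
    child = ET.SubElement(parent, name)
    if isinstance(value, dict):
        for key, nested in value.items():
            _append(child, key, nested)
    elif value is None:
        pass  # null becomes an empty element
    elif isinstance(value, bool):
        child.text = "true" if value else "false"  # checked before str(): str(True) is "True"
    else:
        child.text = str(value)


def json_to_xml(payload: str, root: str = "Record") -> str:
    """Convert a JSON message into an XML string under a hypothetical root element."""
    data = json.loads(payload)
    root_el = ET.Element(root)
    if isinstance(data, dict):
        for key, value in data.items():
            _append(root_el, key, value)
    else:
        _append(root_el, "item", data)
    return ET.tostring(root_el, encoding="unicode")


print(json_to_xml('{"customer": "1000123", "items": [{"id": 1}, {"id": 2}], "active": true}'))
# <Record><customer>1000123</customer><items><id>1</id></items><items><id>2</id></items><active>true</active></Record>
```

Arrays are the main design choice: repeating the element name is what XML schemas usually expect, but it loses the distinction between a one-element array and a single object, which matters for the reverse XML-to-JSON direction.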

18. Leverage Advantco Support

Make use of Advantco's support and resources for any challenges or questions about Kafka configurations. Their experience from many Kafka integration projects can help ensure a smooth and successful implementation.

By following these lessons learned, you can optimize your Kafka integration with SAP Integration Suite and achieve a more robust and efficient data processing system.

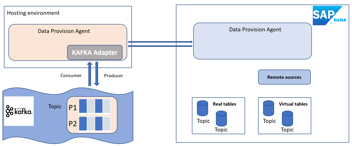

Integration between Kafka and SAP can be challenging and often requires a custom solution. Advantco's Kafka adapter removes this challenge by using your existing SAP framework for seamless and reliable integration. The Advantco Kafka adapter is fully integrated with SAP, so you can keep your existing SAP workflows and business processes. Connections are configured, managed, and monitored with the standard SAP Process Orchestration and SAP Integration Suite (formerly CPI) tools. This deploy-and-run integration solution is more cost-effective than a custom integration (best practices, adaptable to many business use cases, less maintenance, future-proof) and comes with Advantco's first-class support and Kafka integration expertise.

Learn more about SAP and Kafka integration with the Advantco Kafka adapter.
