Apache Kafka Integration

Because Kafka is a fast, scalable, durable, and fault-tolerant publish-subscribe messaging system with real-time data processing capabilities, it is not at all surprising that more than one third of all Fortune 500 companies use it. Companies like LinkedIn, Microsoft, Uber, Netflix, Dropbox, and many more use Apache Kafka to make business decisions in real time. These companies rely on data collection systems for nearly everything, from business analytics to near-real-time operations to executive reporting.

There are many reasons for the huge success of Apache Kafka, the foremost being that it offers an excellent solution to a problem that all companies have: processing data in real time. Companies use Apache Kafka as a distributed streaming platform for building real-time data pipelines and streaming applications. Apache Kafka is horizontally scalable, fault-tolerant, and fast. The most important reason for Kafka's popularity is that "it works, and with excellent performance."

Kafka relies on the principle of zero-copy transfer, in which data moves from the file system cache to the network socket without being copied through the application. It relies on the file system for storage and caching, and its sequential I/O lets the operating system's page cache act as a cache without any extra caching logic; this is much more efficient than maintaining a cache inside a JVM application. Kafka also lets you batch data records into chunks. Batching enables more efficient data compression, reduces I/O latency and the number of network calls, and converts many random writes into sequential ones.
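To make the batching argument concrete, the following Python sketch compares compressing 1,000 small records one at a time with compressing them in batches of 100. It is an illustration only: Kafka producers compress whole record batches with codecs such as gzip, Snappy, LZ4, or zstd, whereas this sketch uses the standard-library `zlib` (DEFLATE) module, and the record contents are invented.

```python
import json
import zlib


def make_records(n: int) -> list[bytes]:
    """Build n small, similar JSON records (hypothetical order events)."""
    return [
        json.dumps({"order_id": i, "status": "CREATED", "plant": "1000"}).encode("utf-8")
        for i in range(n)
    ]


def compressed_size_individually(records: list[bytes]) -> int:
    """Total compressed bytes when every record is compressed on its own."""
    return sum(len(zlib.compress(r)) for r in records)


def compressed_size_batched(records: list[bytes], batch_size: int) -> int:
    """Total compressed bytes when records are grouped and each batch is compressed once."""
    total = 0
    for start in range(0, len(records), batch_size):
        batch = b"\n".join(records[start:start + batch_size])
        total += len(zlib.compress(batch))
    return total


records = make_records(1000)
batch_size = 100
num_batches = len(range(0, len(records), batch_size))  # one send per batch instead of per record
print("individually:", compressed_size_individually(records), "bytes in", len(records), "sends")
print("batched:     ", compressed_size_batched(records, batch_size), "bytes in", num_batches, "sends")
```

Because similar records share most of their bytes, each compressed batch is far smaller than the sum of the individually compressed records, and the number of sends drops from one per record to one per batch.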

Use Case:

The Advantco Kafka Adapter enables you to exchange data between non-SAP systems and the Integration Server or the PCK (Partner Connectivity Kit) by means of Kafka APIs.

Benefits of the Advantco Kafka Adapter

The main advantage of the Advantco Kafka Adapter is that it is fully integrated with the SAP Adapter Framework, which means that Kafka connections can be configured, managed, and monitored using the standard SAP PI/PO and CPI tools. Building on SAP's scalability and stability, the Advantco Kafka Adapter ensures the highest level of availability. The adapter is also fully integrated with the SAP logging/tracing framework.

- Integration with SAP NetWeaver®: The adapter is built on the SAP NetWeaver® Adapter Framework

- Easy and quick installation by importing an SWCV transport (.tpz) into the Integration Repository/Enterprise Service Builder and deploying the J2EE software archive (SDA or SCA) via the J2EE SDM/JSPM.

- Integration with SAP NetWeaver® Runtime Workbench and SAP NetWeaver® J2EE Logging/Tracing. Supports SAP NetWeaver PI/PO 7.5, PI 7.4, and SCPI.

- Configuration of the target topics and of how streams of data are read

- Configuration for polling

- Adapter-Specific Message Attributes

- Content Conversion

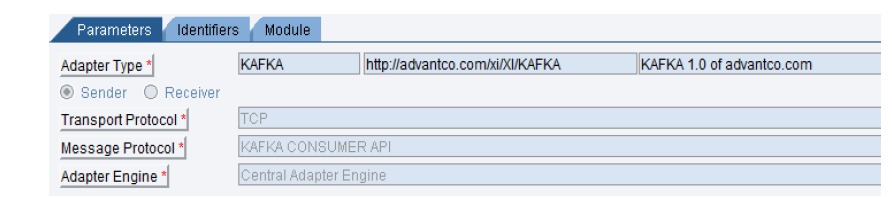

A Sender Kafka adapter and a Receiver Kafka adapter are available:

The Sender Kafka adapter consumes messages from an Apache Kafka server and then forwards the message payloads to the Integration Server or the PCK. The Receiver Kafka adapter sends message payloads received from the Integration Server or the PCK to an Apache Kafka server.

The adapters are configured in the Integration Builder or the PCK as communication channels.

The Sender Kafka Adapter

The Sender Kafka adapter must be configured as a sender channel in the Integration Builder or the PCK. In a clustered environment with multiple server nodes, the Sender Kafka adapter implicitly guarantees that only one session is active across all server nodes at a time.

A Sender Kafka adapter consumes data from Kafka brokers and then sends it to the Integration Server or the PCK.
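The consume-then-forward flow, together with the polling configuration listed earlier, can be sketched as a simple poll loop. This is not the adapter's implementation: `InMemoryPartition` and `poll_and_forward` are hypothetical names, the partition is an in-memory list rather than a real broker, and committing the offset only after each successful hand-off is one common way (assumed here) to obtain at-least-once delivery.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class InMemoryPartition:
    """Hypothetical stand-in for one Kafka topic partition (not a real broker)."""
    records: list[str] = field(default_factory=list)
    committed_offset: int = 0  # next offset this consumer should read

    def poll(self, max_records: int) -> list[tuple[int, str]]:
        """Return up to max_records (offset, value) pairs, starting at the committed offset."""
        end = min(self.committed_offset + max_records, len(self.records))
        return [(offset, self.records[offset]) for offset in range(self.committed_offset, end)]

    def commit(self, next_offset: int) -> None:
        self.committed_offset = next_offset


def poll_and_forward(partition: InMemoryPartition, forward: Callable[[str], None],
                     max_records: int = 10) -> int:
    """One polling cycle: fetch a batch, hand each payload on, commit only after success."""
    batch = partition.poll(max_records)
    for offset, value in batch:
        forward(value)                # if this raises, the offset is not committed...
        partition.commit(offset + 1)  # ...so the record is polled again: at-least-once
    return len(batch)


partition = InMemoryPartition(records=[f"msg-{i}" for i in range(25)])
delivered: list[str] = []
while poll_and_forward(partition, delivered.append, max_records=10):
    pass  # keep polling until a cycle returns no records
print(len(delivered), partition.committed_offset)  # prints: 25 25
```

If the downstream hand-off fails, the failed record and everything after it stay uncommitted and are read again on the next poll, so nothing is lost, at the cost of possible duplicates.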

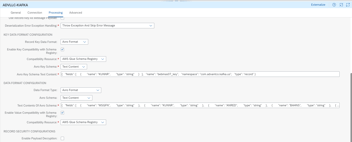

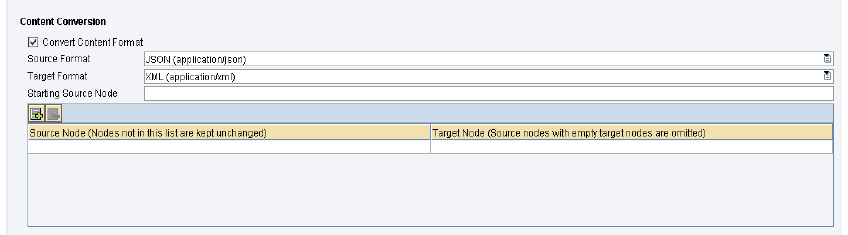

Content Conversion

Content conversion parameters control the conversion of the main message payload between Plain, JSON, and XML formats.
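As an illustration of what JSON-to-XML content conversion involves, here is a minimal Python sketch using only the standard library. The mapping rules (object keys become elements, arrays become repeated sibling elements, scalars become element text) are assumptions chosen for this example, not the adapter's documented conversion rules.

```python
import json
import xml.etree.ElementTree as ET


def _append(parent: ET.Element, name: str, value) -> None:
    """Add `value` under `parent` as one or more elements called `name`."""
    if isinstance(value, list):
        for item in value:  # arrays become repeated sibling elements
            _append(parent, name, item)
    elif isinstance(value, dict):
        element = ET.SubElement(parent, name)
        for key, child in value.items():  # object keys become child elements
            _append(element, key, child)
    else:
        element = ET.SubElement(parent, name)
        if value is not None:  # null becomes an empty element
            # strings are kept as-is; numbers and booleans use their JSON spelling
            element.text = value if isinstance(value, str) else json.dumps(value)


def json_to_xml(payload: str, root_name: str = "root") -> str:
    """Convert a JSON document to XML. Keys must already be valid XML element names."""
    data = json.loads(payload)
    root = ET.Element(root_name)
    if isinstance(data, dict):
        for key, value in data.items():
            _append(root, key, value)
    else:
        _append(root, "item", data)  # wrap a top-level array or scalar
    return ET.tostring(root, encoding="unicode")


print(json_to_xml('{"order": {"id": 42, "items": ["A", "B"], "paid": true}}', "Message"))
```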

The Receiver Kafka Adapter

The Receiver Kafka adapter must be configured as a receiver channel in the Integration Builder or the PCK. A Receiver Kafka channel sends message payloads received from the Integration Server or the PCK to a Kafka server.

Kafka Producer API

Producers publish messages to one or more Kafka topics by sending data to Kafka brokers. Each topic is divided into partitions, and every time a producer publishes a message, the broker simply appends it to the end of one partition, that is, to that partition's last (active) segment file. Producers can also send messages to a partition of their choice.
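A minimal in-memory model of this behavior, assuming Python 3.9+: each partition is an append-only list, an append returns the record's offset, and a producer either uses a caller-chosen partition or derives one. `Topic` and `Producer` are hypothetical names, not the Kafka client API; `zlib.crc32` stands in for the murmur2 hash that Kafka's default partitioner applies to record keys, and keyless records are spread round-robin here for simplicity.

```python
import zlib
from typing import Optional


class Topic:
    """Hypothetical in-memory topic: every partition is an append-only list of (key, value)."""

    def __init__(self, name: str, num_partitions: int) -> None:
        self.name = name
        self.partitions: list[list[tuple[Optional[bytes], bytes]]] = [[] for _ in range(num_partitions)]

    def append(self, partition: int, key: Optional[bytes], value: bytes) -> int:
        """Append to the end of one partition and return the new record's offset."""
        log = self.partitions[partition]
        log.append((key, value))
        return len(log) - 1


class Producer:
    """Hypothetical producer showing the three ways a partition can be picked."""

    def __init__(self, topic: Topic) -> None:
        self.topic = topic
        self._next = 0  # round-robin position for records without a key

    def partition_for(self, key: Optional[bytes], partition: Optional[int]) -> int:
        n = len(self.topic.partitions)
        if partition is not None:  # the caller chose the partition explicitly
            return partition
        if key is not None:  # same key -> same partition; crc32 stands in for Kafka's murmur2
            return zlib.crc32(key) % n
        chosen = self._next % n  # no key: spread records round-robin
        self._next += 1
        return chosen

    def send(self, value: bytes, key: Optional[bytes] = None,
             partition: Optional[int] = None) -> tuple[int, int]:
        """Return (partition, offset) of the appended record."""
        p = self.partition_for(key, partition)
        return p, self.topic.append(p, key, value)


producer = Producer(Topic("orders", num_partitions=3))
print(producer.send(b"created", key=b"order-1"))
print(producer.send(b"paid", key=b"order-1"))  # same partition as above, next offset
print(producer.send(b"audit", partition=2))    # explicitly chosen partition
```

Routing by key keeps all records for one key in a single partition, which is what preserves their order, since ordering in Kafka is guaranteed only within a partition.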

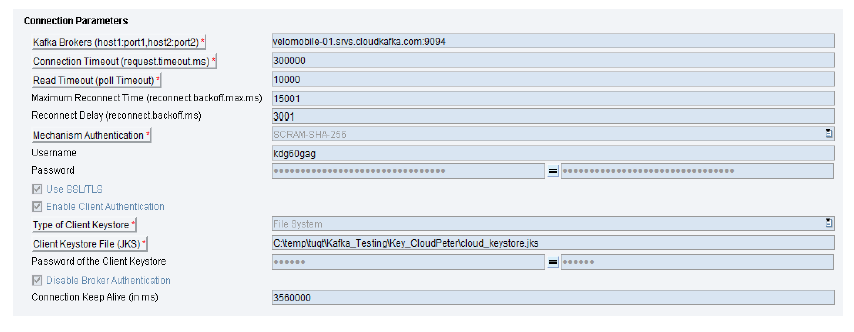

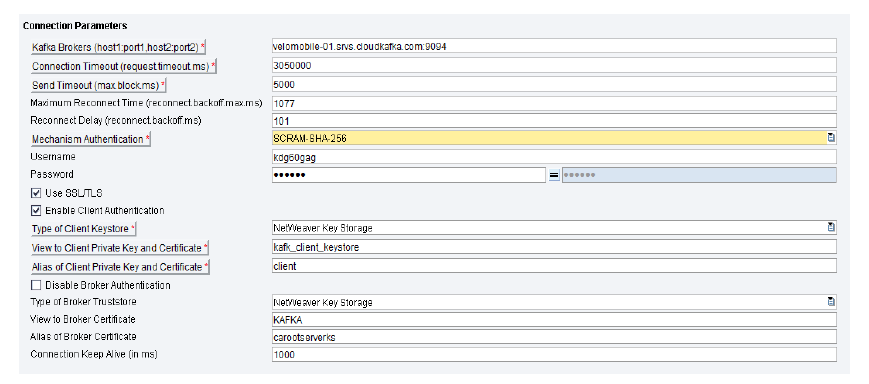

Channel Configuration Parameters

Connection Parameters

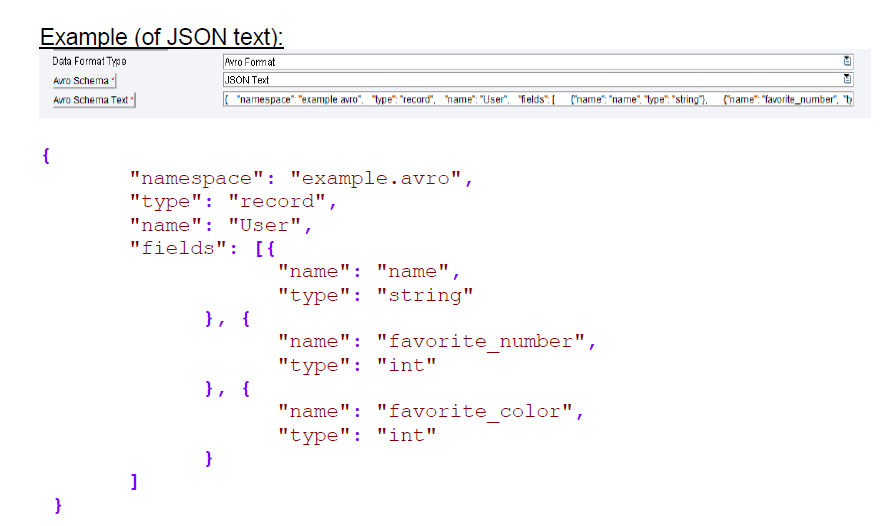

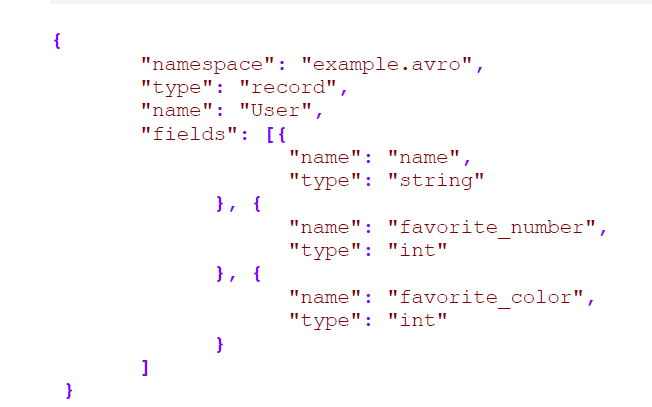

For the Avro format, users can specify the Avro schema either as JSON text directly in the channel configuration or as a file path to an Avro schema file.

Example (of JSON text):
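For illustration, a hypothetical Avro record schema in JSON text form is shown below; the `Order` record, its namespace, and its fields are invented for this sketch. It is wrapped in a small Python check, using only the standard `json` module, that parses the schema text and tests whether a record's fields match a few Avro primitive and union types. This is not full Avro validation; a real deployment would rely on an Avro library.

```python
import json

# Hypothetical schema text of the kind that could be entered in the channel configuration.
ORDER_SCHEMA_TEXT = """
{
  "type": "record",
  "name": "Order",
  "namespace": "com.example.sales",
  "fields": [
    {"name": "orderId", "type": "string"},
    {"name": "quantity", "type": "int"},
    {"name": "comment", "type": ["null", "string"], "default": null}
  ]
}
"""

# Python types accepted for a few Avro primitive types (a subset, for illustration only).
PRIMITIVES = {"null": type(None), "string": str, "int": int, "long": int,
              "double": float, "boolean": bool}


def matches(avro_type, value) -> bool:
    if isinstance(avro_type, list):  # a union: any branch may match
        return any(matches(t, value) for t in avro_type)
    expected = PRIMITIVES[avro_type]
    if expected is int and isinstance(value, bool):  # bool is a subclass of int in Python
        return False
    return isinstance(value, expected)


def conforms(schema_text: str, record: dict) -> bool:
    """Check declared fields only; a missing field is accepted if it has a default."""
    schema = json.loads(schema_text)
    for f in schema["fields"]:
        if f["name"] not in record:
            if "default" not in f:
                return False
            continue
        if not matches(f["type"], record[f["name"]]):
            return False
    return True


print(conforms(ORDER_SCHEMA_TEXT, {"orderId": "A-1", "quantity": 5}))  # True
```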

Advanced Kafka Configuration Parameters

This is where advanced options for the adapter are configured.

Enable Advanced Kafka Configurations

If the basic parameters above do not meet your requirements for working with Kafka brokers, enable this checkbox and specify the supported Kafka producer configurations. The configuration keys are the same as those documented for native Kafka producers.
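The keys below are standard Kafka producer configuration keys; the values are examples, not recommendations. How the adapter's own table presents them is shown only in the screenshot, so this Python sketch simply assembles key/value pairs into `key=value` lines and flags typos against a small, deliberately incomplete allow-list (`KNOWN_PRODUCER_KEYS` and `to_properties` are hypothetical helpers).

```python
# Standard Kafka producer configuration keys; values are examples only.
advanced_settings = {
    "acks": "all",                 # wait until all in-sync replicas have the record
    "enable.idempotence": "true",  # avoid duplicates when the producer retries
    "compression.type": "lz4",     # codec applied to each record batch
    "linger.ms": "20",             # wait up to 20 ms for a batch to fill
    "batch.size": "65536",         # upper bound in bytes for one partition batch
}

# Deliberately incomplete list, used only to catch typos in this sketch.
KNOWN_PRODUCER_KEYS = {
    "acks", "batch.size", "client.id", "compression.type", "enable.idempotence",
    "linger.ms", "max.in.flight.requests.per.connection", "request.timeout.ms", "retries",
}


def to_properties(settings: dict[str, str]) -> str:
    """Render key/value pairs as sorted `key=value` lines, rejecting unknown keys."""
    unknown = sorted(set(settings) - KNOWN_PRODUCER_KEYS)
    if unknown:
        raise ValueError(f"unknown producer keys: {unknown}")
    return "\n".join(f"{key}={value}" for key, value in sorted(settings.items()))


print(to_properties(advanced_settings))
```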

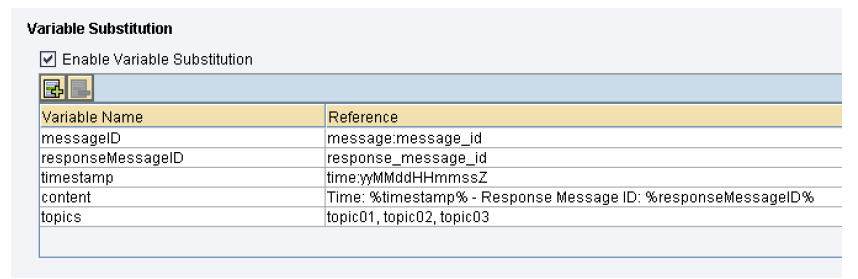

Variable Substitution

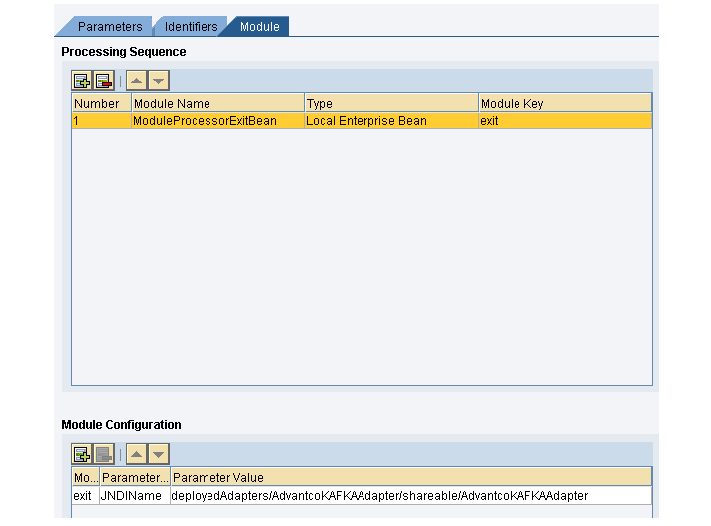

Adapter Module Configuration

The Receiver Kafka adapter requires SAP's standard module ModuleProcessorExitBean to be added to its module chain as the last module. On the Module tab of the adapter configuration in the Integration Builder, add a new row at the last position of the Processing Sequence table:

- Module Name: ModuleProcessorExitBean

- Module Type: Local Enterprise Bean

- Module Key: Any key
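The rule above (ModuleProcessorExitBean must be the last row) can be expressed as a small check. This Python sketch only models rows of the Processing Sequence table as plain data and does not interact with the Integration Builder; the `ModuleRow` type, the `with_exit_bean_last` helper, and the `ExampleCustomModule` name are hypothetical.

```python
from dataclasses import dataclass

EXIT_BEAN = "ModuleProcessorExitBean"


@dataclass(frozen=True)
class ModuleRow:
    """One row of the Processing Sequence table on the channel's Module tab."""
    name: str
    module_type: str
    key: str


def with_exit_bean_last(sequence: list[ModuleRow], key: str = "exit") -> list[ModuleRow]:
    """Return a copy of the sequence in which ModuleProcessorExitBean is the final row."""
    existing = [row for row in sequence if row.name == EXIT_BEAN]
    others = [row for row in sequence if row.name != EXIT_BEAN]
    # Reuse an existing exit-bean row (and its key) if present; otherwise add one.
    exit_row = existing[0] if existing else ModuleRow(EXIT_BEAN, "Local Enterprise Bean", key)
    return others + [exit_row]


# "ExampleCustomModule" is a hypothetical placeholder for any other module in the chain.
sequence = [
    ModuleRow(EXIT_BEAN, "Local Enterprise Bean", "exit"),
    ModuleRow("ExampleCustomModule", "Local Enterprise Bean", "custom"),
]
for position, row in enumerate(with_exit_bean_last(sequence), start=1):
    print(position, row.name, row.module_type, row.key)
```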

Logging and Tracing Configuration

The adapter uses the standard SAP Logging API to write log and trace messages to the standard log and trace files.

- Log messages give users information about adapter execution, including adapter processing errors.

- Trace messages let Advantco's developers look deeply into adapter execution, which helps with debugging.

Conclusion:

Apache Kafka was developed to handle high-volume publish-subscribe messaging and streams. It was designed to be durable, fast, and scalable, and it provides a low-latency, fault-tolerant publish-subscribe pipeline capable of processing streams of events. The Advantco Kafka Adapter enables you to exchange streams of data with the Integration Server or the PCK by means of Kafka APIs.

Key benefits include:

- Support for a variety of security options for encryption and authentication.

- Support for KSQL and Streams APIs.

- Schema Registry integration.

- Support for payload encryption/decryption.

- Support for free-structure, Avro-structure, Protocol Buffers-structure, JSON-structure, Parquet-structure payloads.
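As an illustration of what Schema Registry integration involves, assuming the Confluent Schema Registry wire format: each message serialized with a registered schema starts with a magic byte `0`, followed by the schema ID as a 4-byte big-endian integer, followed by the serialized payload. The Python sketch below only frames and unframes bytes with the standard `struct` module; it does not contact a registry, and the payload bytes are placeholders rather than real Avro output.

```python
import struct

MAGIC_BYTE = 0  # format version byte; always 0 in this wire format


def frame(schema_id: int, payload: bytes) -> bytes:
    """Prefix an already-serialized payload with the magic byte and 4-byte schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + payload


def unframe(message: bytes) -> tuple[int, bytes]:
    """Split a framed message into (schema_id, payload), rejecting unframed data."""
    if len(message) < 5 or message[0] != MAGIC_BYTE:
        raise ValueError("not in Schema Registry wire format")
    (schema_id,) = struct.unpack(">I", message[1:5])
    return schema_id, message[5:]


print(unframe(frame(42, b"\x02hi")))  # (42, b'\x02hi')
```

A consumer reads the schema ID from the header, looks up (and typically caches) the corresponding schema, and only then deserializes the remaining bytes.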

Fully compatible with Kafka 0.11.0, 1.x, and 2.x brokers.

Please reach out to our sales team at sales@advantco.com if you have any questions.
